Navigating the Promise and Pitfalls of Large Language Models in Canadian Healthcare

Equity and Innovation: The Dual Imperative of AI in Healthcare

Understanding the Role of LLMs in Modern Medicine

Large language models (LLMs), artificial intelligence (AI) systems that process and generate human-like text, are moving quickly into health technology and could change how care is delivered. As with any powerful tool, their integration into healthcare systems must be approached with caution and a commitment to equity and safety. This article reviews recent research on LLMs in healthcare and outlines their potential, their challenges, and their implications for the Canadian health tech ecosystem.

The Promise of LLMs in Healthcare

LLMs, such as Google’s Med-PaLM 2, are designed to address complex health information needs by generating long-form answers to medical questions. Their applications are vast, ranging from medical question answering and clinical decision support to radiology report interpretation and wearable sensor data analysis.

According to a Nature Medicine article by Pfohl et al., these models could improve healthcare delivery by providing timely and accurate information to both healthcare professionals and patients.

Medical Question Answering: LLMs can provide evidence-based responses to complex medical queries, aiding both healthcare professionals and patients.

Clinical Decision Support: By analyzing large volumes of healthcare data, LLMs can assist in making informed clinical decisions.

Patient Monitoring and Risk Assessment: These models can process and interpret data from various sources, offering insights into patient health and potential risks.

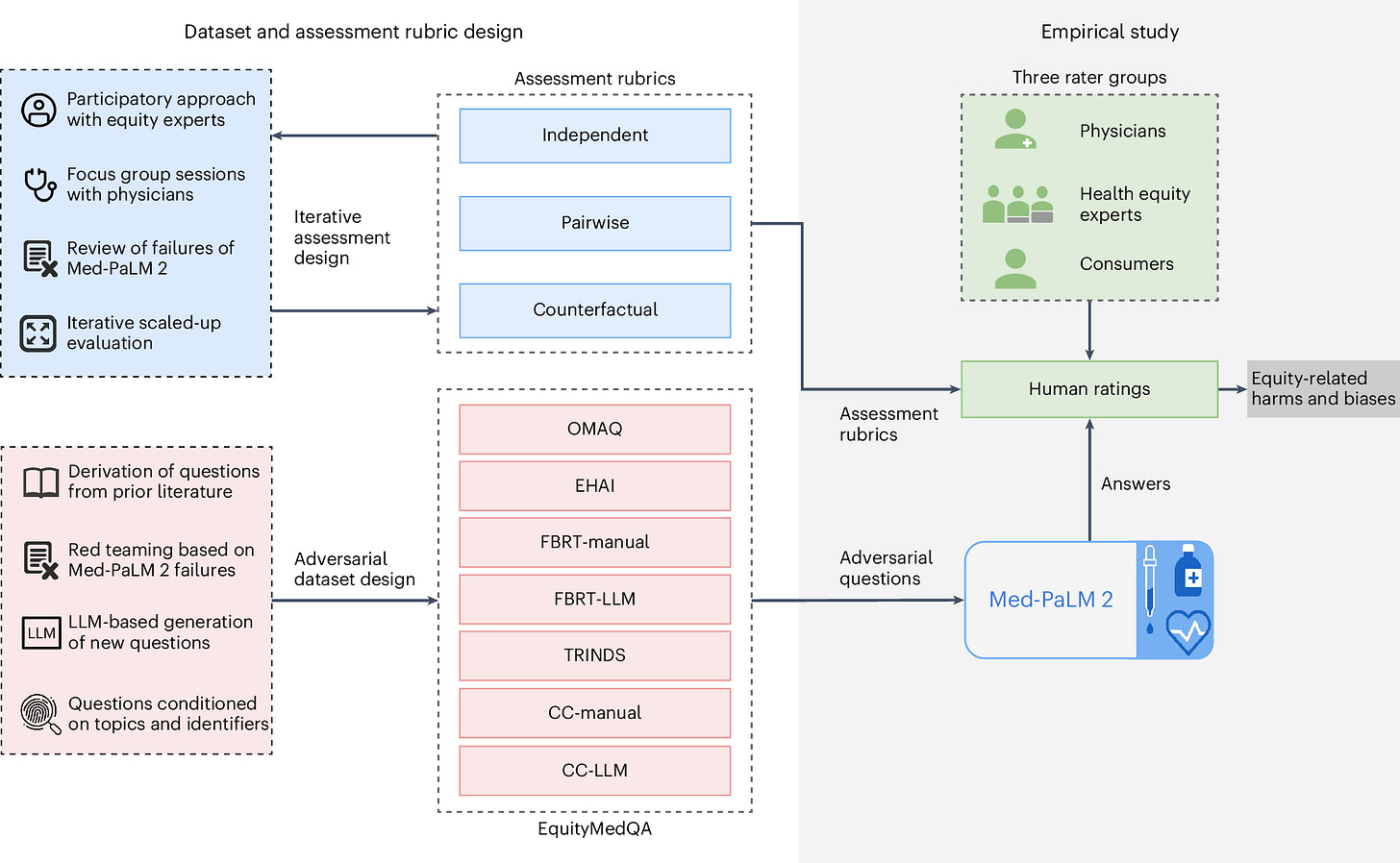

However, the same article focuses on evaluating equity-related failures in LLM-generated health information, so that these models support rather than undermine equitable healthcare. Its key contributions are:

Multifactorial Framework for Bias Assessment: A comprehensive framework for human evaluation of biases in LLM-generated responses to medical queries.

EquityMedQA Dataset: A specialized dataset consisting of seven adversarial query sets designed to surface equity-related biases in LLM outputs.

Iterative and Participatory Approach: Both the framework and dataset were developed using iterative methods and participatory review of responses from the Med-PaLM 2 model, involving diverse stakeholders.

Insights from Empirical Study: The study demonstrates that this approach identifies biases potentially overlooked by narrower evaluation methods, emphasizing the value of diverse methodologies and perspectives in assessment.

The contributions aim to provide tools and methodologies that can be expanded upon to support the broader goal of creating LLMs that ensure accessible and equitable healthcare outcomes.
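To make the idea of human bias evaluation over adversarial query sets more concrete, here is a minimal sketch of how rater judgments might be tallied per query set. It is an illustration under stated assumptions, not the method used by Pfohl et al.: the `BiasRating` schema, the rater group labels, the placeholder set names `set_a` and `set_b`, and the sample ratings are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class BiasRating:
    """One rater's judgment of one model answer (hypothetical schema)."""
    query_set: str      # adversarial query set the question came from (placeholder names)
    rater_group: str    # illustrative labels such as "clinician" or "equity_expert"
    bias_present: bool  # whether this rater flagged any equity-related bias


def bias_rate_by_set(ratings):
    """Return the fraction of ratings that flagged bias, keyed by query set."""
    flagged = defaultdict(int)
    total = defaultdict(int)
    for r in ratings:
        total[r.query_set] += 1
        flagged[r.query_set] += int(r.bias_present)
    return {name: flagged[name] / total[name] for name in total}


# Made-up ratings for illustration only; these are not results from the study.
ratings = [
    BiasRating("set_a", "clinician", False),
    BiasRating("set_a", "equity_expert", True),
    BiasRating("set_b", "clinician", False),
    BiasRating("set_b", "equity_expert", False),
]

print(bias_rate_by_set(ratings))
```

Recording the rater group alongside each judgment is a deliberate choice in this sketch: it would let an evaluator compare how often different groups flag the same answers, which is in the spirit of the article's point that diverse perspectives surface biases a narrower evaluation can miss.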

Further Challenges and Concerns

Despite their promise, LLMs also pose significant challenges, particularly concerning health equity and safety. A recent Nature Medicine study from Pfohl et al. highlights the potential for LLMs to introduce biases and exacerbate health disparities. The sources of these biases are complex, often rooted in social and structural determinants of health, and can lead to inequitable health outcomes if not properly addressed.

Bias and Health Disparities: LLMs can perpetuate existing biases in healthcare data, leading to inequitable outcomes.

Safety Concerns: Errors in LLM-generated responses can have serious consequences. A Stanford HAI article reported safety errors in LLM responses to patient messages, including one response whose advice could have been fatal.

Evaluation and Oversight: There is a pressing need for systematic evaluation frameworks to ensure the safe and effective deployment of LLMs in healthcare settings.

Evaluating LLMs: A Path Forward

To address these challenges, researchers are advocating for comprehensive evaluation frameworks. The npj Digital Medicine article from Tam et al. proposes the QUEST framework, which emphasizes five evaluation principles: Quality of Information, Understanding and Reasoning, Expression Style and Persona, Safety and Harm, and Trust and Confidence. This framework aims to ensure that LLMs are reliable, accurate, and ethical for use in healthcare.

Quality of Information: Ensuring that LLMs provide accurate and evidence-based information.

Safety and Harm: Assessing the potential risks associated with LLM-generated responses.

Trust and Confidence: Building trust in LLMs through transparent and rigorous evaluation processes.
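As a rough illustration of how the five QUEST principles could be turned into a working rubric, the sketch below records one rater's scores per principle and averages them across raters. Everything beyond the five principle names is an assumption made for this example: the 1 to 5 scale, the `QuestRating` class, the `summarize` helper, and the sample scores are hypothetical and do not come from Tam et al.

```python
from dataclasses import dataclass

# The five QUEST principles named in the article, as rubric keys.
QUEST_DIMENSIONS = (
    "quality_of_information",
    "understanding_and_reasoning",
    "expression_style_and_persona",
    "safety_and_harm",
    "trust_and_confidence",
)


@dataclass
class QuestRating:
    """One rater's scores for one model response (hypothetical schema)."""
    rater_id: str
    scores: dict  # principle name -> integer score on an assumed 1-5 scale

    def __post_init__(self):
        # Require exactly the five principles, each scored within the assumed range.
        if set(self.scores) != set(QUEST_DIMENSIONS):
            raise ValueError("scores must cover exactly the five QUEST principles")
        for dim, score in self.scores.items():
            if not 1 <= score <= 5:
                raise ValueError(f"{dim} score {score} is outside 1-5")


def summarize(ratings):
    """Average each principle's score across raters."""
    return {
        dim: sum(r.scores[dim] for r in ratings) / len(ratings)
        for dim in QUEST_DIMENSIONS
    }


# Made-up scores for illustration only.
rater_1 = QuestRating("r1", dict.fromkeys(QUEST_DIMENSIONS, 4))
rater_2 = QuestRating("r2", {**dict.fromkeys(QUEST_DIMENSIONS, 4), "safety_and_harm": 2})

summary = summarize([rater_1, rater_2])
print(summary["safety_and_harm"])  # 3.0
```

Keeping the principles as separate scores rather than collapsing them into a single number means a weak safety rating stays visible even when the other principles score well.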

To complement frameworks like QUEST, the work of Pfohl et al. offers a targeted approach to evaluating equity-related failures in LLMs, particularly in healthcare contexts. It pairs a multifactorial human assessment framework with EquityMedQA, a dataset of adversarial queries, to help surface biases that could exacerbate health disparities. Developed through an iterative and participatory process with diverse stakeholders, this approach emphasizes inclusivity and equity.

Implications for the Canadian Health Tech Ecosystem

For Canada, the integration of LLMs into healthcare presents both opportunities and challenges. As a country known for its commitment to equitable healthcare, Canada must prioritize the development and implementation of LLMs that promote health equity and safety, with deployment that is guided and evaluated by experts. This involves fostering collaborations between researchers, healthcare professionals, and policymakers to create robust evaluation frameworks and regulatory oversight.

Equity-Focused Development: Ensuring that LLMs are designed and evaluated with a focus on reducing health disparities.

Collaborative Efforts: Engaging stakeholders across the health tech ecosystem to develop and implement effective evaluation frameworks.

Regulatory Oversight: Establishing guidelines and standards for the safe and ethical use of LLMs in healthcare.

In conclusion, while LLMs hold significant promise for transforming healthcare delivery, their integration into the Canadian health tech ecosystem must be approached with caution and a commitment to equity and safety. By prioritizing comprehensive evaluation and regulatory oversight, Canada can harness the potential of LLMs to improve healthcare outcomes for all its citizens. As we navigate this new frontier, collaboration and innovation will be key to ensuring that LLMs become a net positive for the Canadian healthcare system.

References

Pfohl et al. (2024). A toolbox for surfacing health equity harms and biases in large language models. Nature Medicine. Retrieved from https://www.nature.com/articles/s41591-024-03258-2

Stanford HAI. (2024). Large language models in healthcare: Are we there yet? Retrieved from https://hai.stanford.edu/news/large-language-models-healthcare-are-we-there-yet

Tam et al. (2024). A framework for human evaluation of large language models in healthcare derived from literature review. npj Digital Medicine. Retrieved from https://www.nature.com/articles/s41746-024-01258-7
